Rate limiting is a technique used to control the amount of incoming and outgoing traffic to or from a network. It restricts the number of requests a client can make to your server within a given timeframe. This is generally done to prevent abuse, ensure fair usage, and avoid overloading the server.

Why do we need to use rate limiting?

- Avoid resource starvation caused by Denial of Service (DoS) attacks.

- Prevent server overload.

- Control costs.

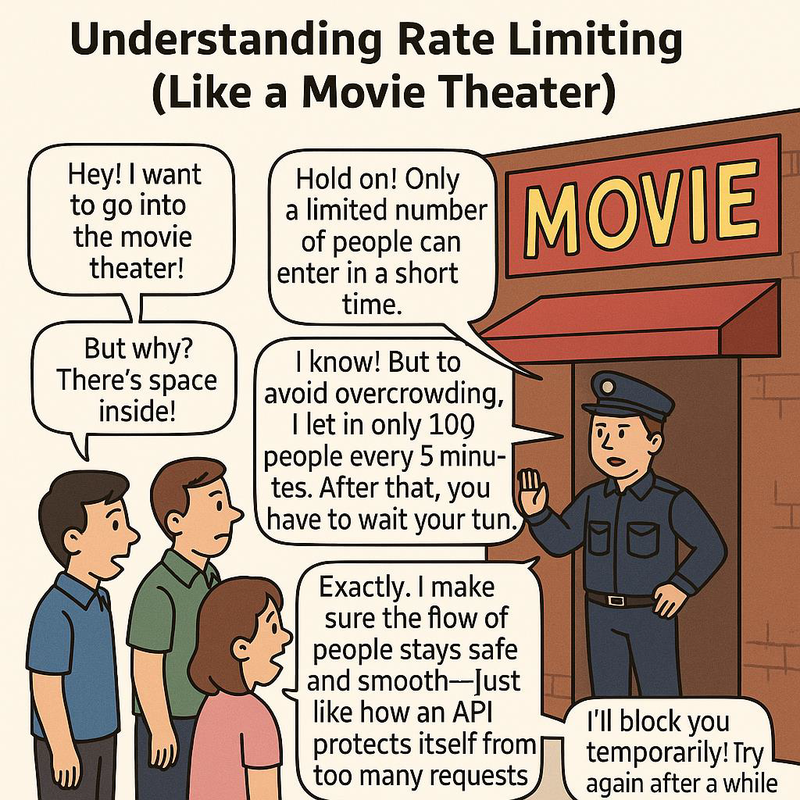

Let's illustrate the concept of rate limiting with a general example.

Armed with core concepts, we’re ready to examine deeper nuances in rate limiting.

The requirements of a rate limiter API fall into two categories:

- Functional Requirements

- Non-Functional Requirements

Let’s see what those are:

Functional Requirements:

- It should allow the definition of multiple rate limiting rules.

- It should return a clear response to clients when the rate limit is exceeded.

- It should count how many requests a user has made in a time window.

Non-Functional Requirements:

- The API should be highly available and scalable.

- It should be secure and protected against API attacks.

- The API should be easy to integrate with existing systems.

A common question is where to implement the rate limiter: on the client side or on the server side. Let's discuss that.

Rate limiting is generally implemented on the server side rather than the client side.

Why is this the preferred approach?

- Positional Advantage: A client-side rate limit would require every client to implement its own rate limiter, whereas the server sits in front of all traffic and can enforce one policy for every client.

- Security: Implementing rate limiters on the server side also provides better security, as it allows the server to prevent attacks from overwhelming the system with a large number of requests.

Key Components in Rate Limiter:

- Define the rate limiting policy: Begin by setting up the rules for rate limiting. This includes deciding how many requests a user can make in a certain time frame (like 100 requests per minute), the duration of that time window, and what action to take when someone goes over the limit — whether it's blocking the request, returning an error, or delaying it.

- Store request counts: The API needs to track how many requests each client makes. A common approach is to use a fast, in-memory database like Redis or a scalable store like Cassandra to keep count of requests per user or client.

- Identify the client: To apply rate limits correctly, the API must know who is making each request. This can be done by using a unique identifier such as an IP address, an API key, or a user ID.

- Handle incoming requests: Whenever a client sends a request, the API should first check if they've already hit their limit within the current time window. If they have, it should follow the policy you’ve defined (like returning an error). If not, the system should increase their request count and allow the request to go through.

- Expose an API endpoint: Finally, the API should tell clients how many requests they have left within the current time window.
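The components above can be sketched together as a small fixed-window counter. This is a minimal illustration, not a production design: the names `Policy`, `FixedWindowLimiter`, `allow`, and `remaining` are hypothetical, a plain dictionary stands in for Redis or Cassandra, and the client identifier is assumed to be an API key.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Policy:
    """Rate limiting policy: at most `limit` requests per `window_seconds`."""
    limit: int = 100
    window_seconds: int = 60


@dataclass
class FixedWindowLimiter:
    policy: Policy
    # Maps (client_id, window_index) -> request count.
    # A plain dict stands in for an external store; old windows are never
    # cleaned up in this sketch.
    counts: dict = field(default_factory=dict)

    def _key(self, client_id: str, now: Optional[float]):
        now = time.time() if now is None else now
        return (client_id, int(now // self.policy.window_seconds))

    def allow(self, client_id: str, now: Optional[float] = None) -> bool:
        """Return False if the client is at its limit, else count the request."""
        key = self._key(client_id, now)
        if self.counts.get(key, 0) >= self.policy.limit:
            return False  # policy action here: reject the request
        self.counts[key] = self.counts.get(key, 0) + 1
        return True

    def remaining(self, client_id: str, now: Optional[float] = None) -> int:
        """Requests the client has left in the current window."""
        key = self._key(client_id, now)
        return self.policy.limit - self.counts.get(key, 0)


limiter = FixedWindowLimiter(Policy(limit=3, window_seconds=60))
results = [limiter.allow("api-key-123", now=0.0) for _ in range(4)]
# The first three requests are allowed and the fourth is rejected.
```

The `now` parameter exists only so the sketch can be exercised with fixed timestamps; in normal use it would be omitted.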

Here are a few ways to implement a rate limiting API:

- Using Redis

- AWS WAF (Web Application Firewall)

- Custom Made Rate Limits

Using Redis

Checking a disk-based database on every request adds latency, so an in-memory store such as Redis is a better fit. Redis provides built-in commands for maintaining counters:

- INCR: Increases the counter by 1, creating it with a value of 1 if it does not exist yet.

- EXPIRE: This is used for setting the timeout on the stored counter. This counter is automatically deleted from the storage when the timeout expires.
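As a rough sketch of how INCR and EXPIRE combine into a fixed-window limiter: the function below only assumes a client object with `incr(name)` and `expire(name, time)` methods, which is the shape the redis-py client exposes. The `InMemoryStandIn` class, the `is_allowed` name, and the `rate:` key prefix are hypothetical and are there only so the example runs without a Redis server.

```python
class InMemoryStandIn:
    """Hypothetical stand-in with only incr() and expire().

    It records the requested TTL but does not actually expire keys.
    """

    def __init__(self):
        self.values = {}
        self.ttls = {}

    def incr(self, name):
        self.values[name] = self.values.get(name, 0) + 1
        return self.values[name]

    def expire(self, name, time):
        self.ttls[name] = time
        return True


def is_allowed(client, client_id, limit=100, window_seconds=60):
    """Return True if this request is within the limit for the current window."""
    key = f"rate:{client_id}"
    count = client.incr(key)  # INCR: a missing key starts at 1
    if count == 1:
        # First request in the window: start the countdown with EXPIRE.
        client.expire(key, window_seconds)
    return count <= limit


client = InMemoryStandIn()
results = [is_allowed(client, "user-1", limit=2) for _ in range(3)]
# The third request exceeds the limit of 2 and is rejected.
```

Note that INCR followed by EXPIRE is two separate commands. If the process fails between them, the key is left without a timeout, so a real deployment would likely wrap both in a transaction or a server-side script.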

AWS WAF (Web Application Firewall)

AWS WAF acts as your first line of defense, shielding your web applications and APIs from common exploits, malicious bots, and unwanted traffic. Like a digital bouncer, it carefully inspects every HTTP and HTTPS request coming to your doorstep, deciding who gets in and who gets turned away. By filtering out bad actors before they reach your servers, it helps prevent security breaches, maintains your service availability, and stops resource-hogging traffic in its tracks.

Rate limiting at the Load Balancer level using AWS WAF helps you control the number of requests a user (typically identified by IP) can make to your application through the load balancer (like Application Load Balancer – ALB). This helps defend against abuse, bots, and certain denial-of-service patterns before traffic hits your application layer.

Why Use Rate Limiting with WAF?

- Protect against brute-force attacks

- Throttle API abuse or scraping

- Mitigate spamming or excessive requests

- Prevent service degradation during traffic spikes

How it Works:

When integrated with an Application Load Balancer (ALB), AWS WAF can inspect incoming traffic before it's forwarded to targets. Here's what happens:

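- The request hits the load balancer.

- AWS WAF evaluates the request against your web ACL (Access Control List).

- If a rate-based rule is triggered (e.g., a single IP exceeds 100 requests in 5 minutes), AWS WAF applies the action configured for that rule, such as blocking the request.

- Otherwise, the request continues through the remaining rules and, if allowed, is forwarded to your targets.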
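For illustration, a rate-based rule of this kind might be described as the Python dictionary below, following the shape of a rule in the AWS WAFv2 API. Treat it as a sketch: the rule name, priority, and metric name are placeholders, and the 5-minute window is assumed to be the service default rather than set explicitly, so check the current AWS documentation before relying on it.

```python
# A rate-based rule that blocks an IP once it exceeds 100 requests
# in the evaluation window. Names and priority are placeholders.
rate_limit_rule = {
    "Name": "limit-requests-per-ip",
    "Priority": 1,
    "Statement": {
        "RateBasedStatement": {
            "Limit": 100,               # requests allowed per window
            "AggregateKeyType": "IP",   # count requests per source IP
        }
    },
    "Action": {"Block": {}},
    "VisibilityConfig": {
        "SampledRequestsEnabled": True,
        "CloudWatchMetricsEnabled": True,
        "MetricName": "limit-requests-per-ip",
    },
}
```

A dictionary like this would go in the list of rules of a web ACL, which is then associated with the load balancer.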

Custom Made Rate Limiting

To build a custom rate limiting API, we can choose from several well-known algorithms. Here are two of them:

Token Bucket:

The Token Bucket algorithm is a popular method for implementing rate limiting. Imagine a bucket with a fixed capacity that fills up with tokens at a steady rate. Every time a user makes a request, one token is taken out of the bucket. If there are no tokens left, the system can either delay the request until new tokens are available or reject it outright. This lets users make bursts of requests up to the bucket's capacity, while the refill rate caps the average rate over time.
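A minimal sketch of the idea in Python follows. The class name `TokenBucket` and its parameters are illustrative, the bucket is assumed to start full, and this version rejects a request when no token is available instead of delaying it.

```python
import time


class TokenBucket:
    def __init__(self, capacity, refill_rate, now=None):
        self.capacity = capacity        # maximum number of tokens (burst size)
        self.refill_rate = refill_rate  # tokens added per second
        self.tokens = float(capacity)   # assumption: the bucket starts full
        self.last_refill = time.monotonic() if now is None else now

    def allow(self, now=None):
        """Refill based on elapsed time, then try to take one token."""
        now = time.monotonic() if now is None else now
        elapsed = now - self.last_refill
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        self.last_refill = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # no token left: reject the request


bucket = TokenBucket(capacity=2, refill_rate=1, now=0.0)
burst = [bucket.allow(now=0.0) for _ in range(3)]
# Two requests pass as a burst; the third finds the bucket empty.
later = bucket.allow(now=1.0)
# One second later a single token has been refilled.
```

In a real service there would be one bucket per client, which is where the per-client storage cost comes from.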

Pros:

- Efficient Resource Usage

- Flexible and allows burst traffic

Cons:

- Burst Traffic Can Still Happen: Sudden bursts of traffic are allowed if the bucket is full, which might overwhelm downstream systems if not handled properly.

- Requires Storage for Each Client

Leaky Bucket:

The Leaky Bucket algorithm works similarly to water leaking from a bucket at a constant rate. Incoming requests are added to the bucket, and they are processed at a fixed rate. If the bucket overflows, the requests are dropped.
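One way to sketch this in Python is a bounded queue that drains at a fixed rate. The `LeakyBucket` class and its `add` method are hypothetical names, and the "processing" of a request is reduced to removing it from the queue.

```python
import time
from collections import deque


class LeakyBucket:
    def __init__(self, capacity, leak_rate, now=None):
        self.capacity = capacity    # maximum number of queued requests
        self.leak_rate = leak_rate  # requests processed per second
        self.queue = deque()
        self.last_leak = time.monotonic() if now is None else now

    def _leak(self, now):
        """Drain the requests that should have been processed by `now`."""
        leaked = int((now - self.last_leak) * self.leak_rate)
        if leaked > 0:
            for _ in range(min(leaked, len(self.queue))):
                self.queue.popleft()  # a real system would handle the request here
            self.last_leak += leaked / self.leak_rate
        if not self.queue:
            self.last_leak = now  # an empty bucket has nothing left to drain

    def add(self, request, now=None):
        """Queue the request, or return False if the bucket would overflow."""
        now = time.monotonic() if now is None else now
        self._leak(now)
        if len(self.queue) >= self.capacity:
            return False  # bucket is full: drop the request
        self.queue.append(request)
        return True


bucket = LeakyBucket(capacity=2, leak_rate=1, now=0.0)
accepted = [bucket.add(name, now=0.0) for name in ("a", "b", "c")]
# "a" and "b" are queued; "c" overflows the bucket and is dropped.
```

Because requests leave the queue at a constant rate, downstream systems see a smooth flow even when arrivals are bursty.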

Pros:

- Prevents Bursty Traffic

- Simple Implementation

Cons:

- Doesn’t Support Bursts

- Queue Can Overflow

- Less Flexible Than Token Bucket

Conclusion:

Rate limiting is a vital technique in modern systems to ensure reliability, security, and fairness in how resources are accessed. Whether you choose to implement it using Redis, AWS WAF, or a custom algorithm like Token Bucket or Leaky Bucket, the core principles remain the same: control traffic, prevent abuse, and protect your infrastructure. With proper implementation, rate limiting becomes not just a safeguard, but a smart way to maintain performance and user satisfaction across your platform.